When insights aren’t enough: how SaaS & AI companies who are paid to deliver insights get hurt by their own customers’ execution gaps

The old proverb — about leading a horse to water — has a corollary: the horse won’t be grateful to the person who showed them the pond.

This is a problem for vendors who provide SaaS, AI & services which deliver insights to their customers. If customers don’t, won’t or can’t act on those insights, then how much value will they place on the source of those insights? How long will it take them to ask:

Hang on. Why are we paying so much for this?

There are some fascinating insights businesses which I’ve used as a customer, recommended to my own clients, and seen up close as a consultant. More and more vendors are now using AI to deliver remarkable capabilities. For example:

Predictive churn analysis on utility customers (e.g. identifying telecoms subscribers who are most likely to churn)

Predictive healthcare / diagnostics

Predictive maintenance on industrial equipment

Online ad campaign optimisation

Optimising metal and mineral processing/manufacturing plants

Fraud detection in banking and payments

Detection of legal or compliance risks

Cost prediction in insurance

These propositions often combine SaaS with AI and a human-in-the-loop service element. The more deeply I’ve looked into and worked with these companies, the more I’ve come to appreciate:

How AI-derived insights can be exceptionally valuable or even transformational to a business

The degree to which that business can fail to benefit because there are so many hurdles to get those insights actioned effectively or at all.

Recently, I’ve seen this insight-but-no-action problem affect a series of clients and prospective clients, whose products use AI to generate insights for their customers.

The consequences of this problem are:

There’s a big mismatch between what vendors want to charge, and customers’ willingness to pay. The impact on SaaS pricing strategy is substantial.

It’s harder for vendors to close deals with prospects

Proof of concept (PoC) deployments don’t deliver the expected impact

This problem is not unique to AI companies, and some of this analysis is equally applicable to insights generated without AI. But there are aspects to this problem which are inherent to AI-derived insights because they deliver a step-change in the quantity and quality of actionable inputs available to the customer. The recent surge in use cases for AI-derived insights, and the corresponding jump in new propositions created for that purpose, means this problem will grow more acute and occur more frequently.

This is becoming such a common problem for startups & companies who provide AI-derived insights & analytics products, that, in addition to my other posts on pricing & product strategy, I’m writing a series of posts to define & explain the problem, and discuss what vendors can do about it.

The AI insights-to-actions loop

This is the traditional insight pyramid or “DIKW pyramid” which is usually credited to Russell Ackoff [^1].

It is beloved by some analysts and the foundation of hundreds of Medium posts, ranging from sensible to silly. I’m not going to critique it. Rather, I’ve been thinking a lot about it because what the model lacks (and this is not a criticism) is an explanation of how wisdom or knowledge get connected to actions [^2].

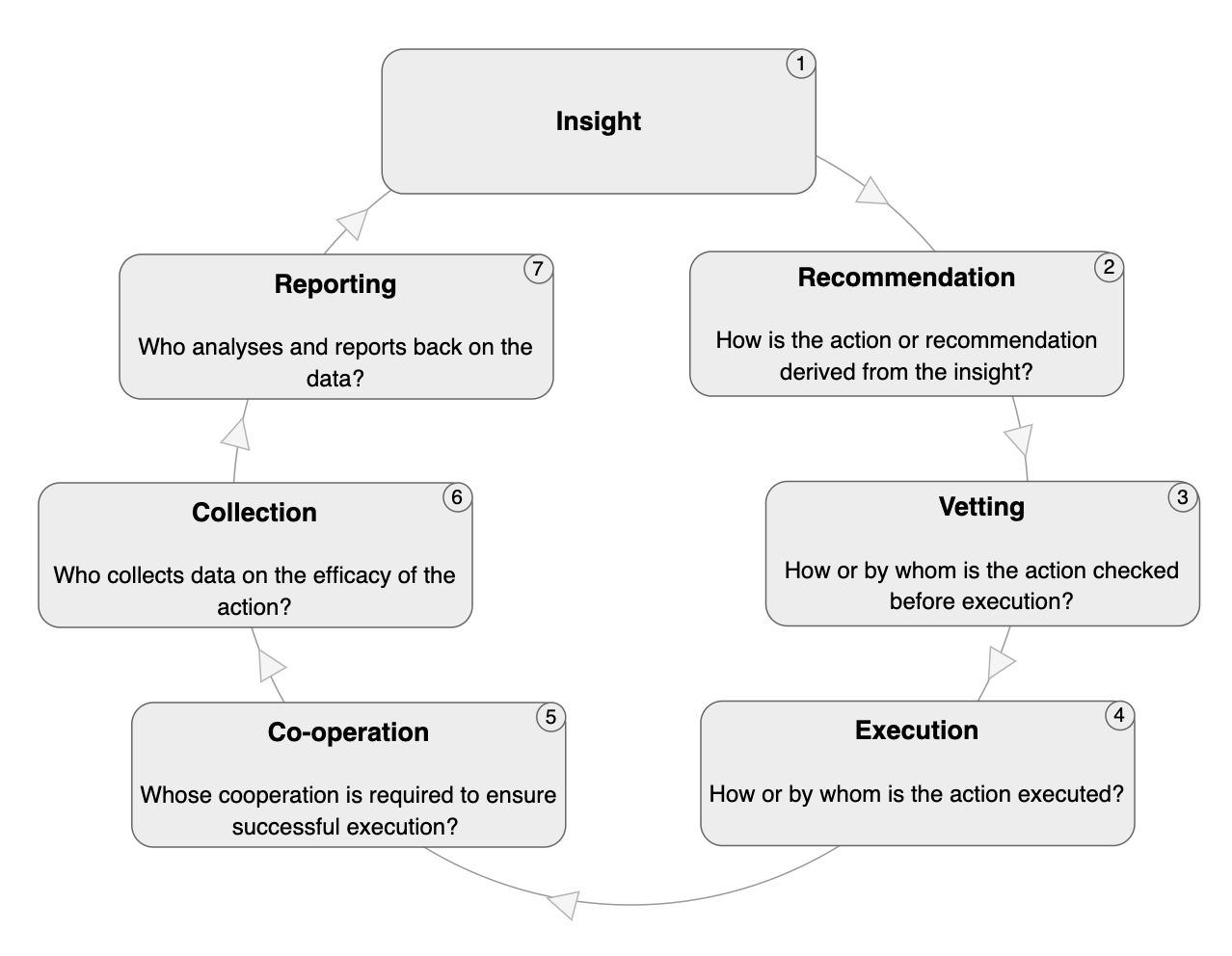

It’s helpful to think about the connection of insight to action as a series of steps:

1. “Insight” is generated

2. A recommended action is derived from the insight

3. The recommendation may need to be checked or vetted before it gets executed

4. The recommended action is executed

5. Execution of the action can require the cooperation of third parties

6. Data is then collected on the measurable impact/efficacy of the action

7. That data is collated and reported, and can be fed back into step 1 to improve the quality of the insights

If step 7 feeds back into step 1, then you’ve got a loop

Each of those steps masks a range of options about how the step is implemented and — especially — who is doing it.
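One way to make the "who is doing it" question concrete is to write the loop down as data. The sketch below is purely illustrative: the `Actor` categories, the `LoopStep` fields and the assignment of actors to steps are my own assumptions for a hypothetical customer, not a description of any real deployment.

```python
from dataclasses import dataclass
from enum import Enum


class Actor(Enum):
    SOFTWARE = "software"
    CUSTOMER_STAFF = "customer staff"
    THIRD_PARTY = "third party"


@dataclass(frozen=True)
class LoopStep:
    number: int
    name: str
    actor: Actor        # who performs the step (hypothetical assignment)
    needs_expert: bool  # does the step need specialist expertise?


# Hypothetical assignment for one customer; every value here is made up.
LOOP = [
    LoopStep(1, "Insight is generated", Actor.SOFTWARE, False),
    LoopStep(2, "Recommended action is derived", Actor.CUSTOMER_STAFF, True),
    LoopStep(3, "Recommendation is vetted", Actor.CUSTOMER_STAFF, True),
    LoopStep(4, "Action is executed", Actor.CUSTOMER_STAFF, False),
    LoopStep(5, "Third parties cooperate", Actor.THIRD_PARTY, False),
    LoopStep(6, "Efficacy data is collected", Actor.CUSTOMER_STAFF, False),
    LoopStep(7, "Data is collated and reported", Actor.SOFTWARE, False),
]


def steps_outside_software(loop):
    """Return the steps that depend on people rather than software."""
    return [step for step in loop if step.actor is not Actor.SOFTWARE]


manual = steps_outside_software(LOOP)
for step in manual:
    expertise = "expert needed" if step.needs_expert else "no specialist needed"
    print(f"Step {step.number}: {step.name} ({step.actor.value}, {expertise})")
```

In this made-up example, five of the seven steps sit outside the software, which is exactly where the vendor has the least control.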

Each step in the loop is another pyramid

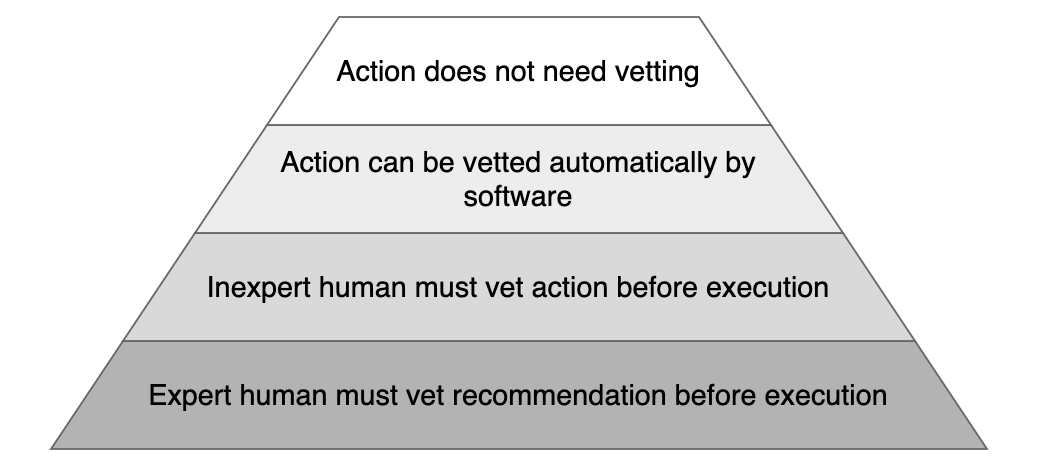

Each step in the loop can be represented as a DIKW-style pyramid. As we move up each level of the pyramid, we:

Reduce the reliance on human expertise

Reduce the marginal cost of each action

Increase the probability an action will be executed

Increase the probability any executed action will result in efficacy data
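A rough way to see why those probabilities matter is to multiply them along the loop. The sketch below is a back-of-the-envelope illustration only: the per-step probabilities are invented, and treating the steps as independent is a simplifying assumption of mine rather than something measured.

```python
from math import prod

# Illustrative per-step completion probabilities for steps 2-7 of the loop.
# These numbers are invented for the example, not measured.
mostly_manual = [0.8, 0.7, 0.6, 0.5, 0.5, 0.5]
mostly_automated = [0.99, 0.95, 0.95, 0.9, 0.95, 0.99]


def loop_completion_probability(step_probabilities):
    """Probability that every step completes, assuming steps are independent."""
    return prod(step_probabilities)


manual_p = loop_completion_probability(mostly_manual)
automated_p = loop_completion_probability(mostly_automated)
print(f"Mostly manual loop:    {manual_p:.1%}")
print(f"Mostly automated loop: {automated_p:.1%}")
```

With these made-up inputs, only around 4% of insights make it all the way round the mostly manual loop, versus roughly three quarters for the mostly automated one. The exact figures are meaningless; the point is how quickly modest per-step losses compound.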

Let’s walk through the loop, step-by-step.

Step 2: How the action is generated/recommended

What happens after the insight is generated? As the vendor, does your proposition also generate an accompanying recommended action? If not, then:

Who generates the recommendation?

How much expertise do they need?

How much work will they need to do to generate that recommended action?

For example, let’s say you’re a vendor who provides an AI solution which analyses cellphone subscriber data (billing, usage, customer support etc), and then identifies subscribers at an elevated risk of churning to a rival network. Is the recommendation automatically derived? E.g. get a Customer Retention rep to call every at-risk subscriber. Or does it require a human to decide which customers require action, and select the correct action from a menu of options?

Step 3: How the recommendation is checked or vetted before execution

To what extent does the recommendation need to be checked or “vetted” before it is executed? Is it automatically handed off to another system which can check it, or is a human needed in the loop? If a human is involved then how much expertise do they need?

Following on from the cellphone subscriber retention example above: if the system can automatically recommend specific actions per subscriber, does a network employee need to manually check/approve each recommended action before it’s executed?

Step 4: Who executes the action?

How is the recommendation actually executed? Is it all done in software, or does a human need to be involved? And, as before, how much expertise does that person need?

For cellphone subscriber retention: does the CRM automatically generate the relevant email offers and then send them? Or does a human rep need to make a call? Is the rep a trained retention specialist, or any call-centre employee?

Step 5: Who must cooperate to make the action effective?

A requirement for third-party cooperation adds another hurdle. If another team or organisation is brought into the loop, then why & how are they motivated to do what’s asked of them? How does your request fit into their to-do list?

It is not a safe assumption that your priority will be their priority.

For example: if the cellphone network pushes responsibility for outbound customer retention calls to one of their customer service call centres, then how are the staff there incentivised to undertake and care about outbound retention work relative to their other responsibilities? To what extent will those staff be trained regarding the reasons a specific subscriber is identified as a churn-risk and the appropriate actions to take?

As the vendor of the original insight, there is a risk here that you end up worrying about things you cannot possibly control. There are limits to how deeply you can involve yourself in your customer’s operations, and you can drive yourself crazy with your customer’s dysfunction.

BUT… if your customer is routinely actioning insights inappropriately — or not actioning them at all — because the notionally responsible team can’t or won’t do it properly, then your customer will place a correspondingly lower value on your proposition as the source of that insight. It won’t even matter if your buyer knows or understands the source of the blockage: they won’t get the impact they’re hoping for or the ROI you promised them.

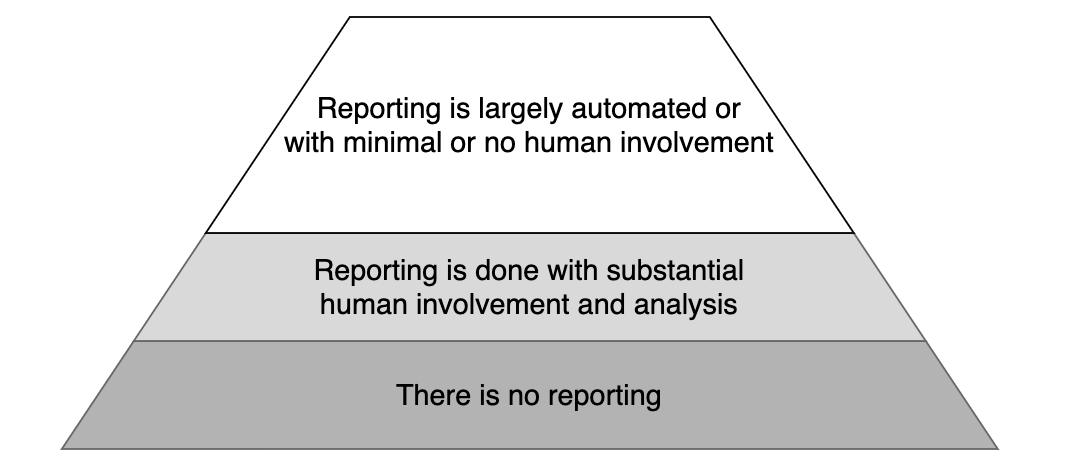

Steps 6 & 7: Who (or what) collects the data on the efficacy of the action? Who analyses and reports back?

Data collection

Data reporting

Automated data collection and reporting is ideal but not always possible. If outcome data collection, analysis and reporting is not part of an automated closed loop, then it won’t necessarily be done properly, or to a standard which enables the customer to perceive value and the vendor to prove ROI.

Data collection, analysis and reporting is a key issue to be addressed prior to customer implementation. As the vendor, you will want to know:

How is the data being collected?

Who is responsible for collecting it?

Who is responsible for collating, analysing and reporting the data? [^3]

What access to, and visibility of, that data will the vendor have?
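To illustrate what the answers to those questions might feed into, here is a minimal sketch of an outcome log and a funnel report built from it. The `InsightRecord` fields and the sample records are hypothetical, chosen to fit the subscriber retention example; a real customer's data will look different.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class InsightRecord:
    insight_id: str
    actioned: bool          # was the recommended action executed?
    outcome_recorded: bool  # was efficacy data captured afterwards?
    subscriber_retained: Optional[bool] = None  # only known if outcome recorded


def funnel(records):
    """Count how many insights survive each stage of the loop."""
    delivered = len(records)
    actioned = sum(r.actioned for r in records)
    measured = sum(r.actioned and r.outcome_recorded for r in records)
    return {"delivered": delivered, "actioned": actioned, "measured": measured}


# Made-up records for illustration only.
records = [
    InsightRecord("a1", actioned=True, outcome_recorded=True, subscriber_retained=True),
    InsightRecord("a2", actioned=True, outcome_recorded=False),
    InsightRecord("a3", actioned=False, outcome_recorded=False),
    InsightRecord("a4", actioned=True, outcome_recorded=True, subscriber_retained=False),
]

report = funnel(records)
print(report)
```

Even a log this simple separates "the insight was wrong" from "the insight was never actioned" or "nobody recorded what happened", which is the distinction a vendor needs when trying to prove ROI.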

The danger of stopping at the start of the AI-insights loop

Colour-coding our loop should hammer home how & why vendors are so vulnerable to seeing their insights’ value go unrecognised.

It’s possible to look at this and conclude vendors should focus on propositions which only sit at the top of each pyramid [^4]. After all, the more that’s left to the customer, and the less that’s automated, the greater the opportunity for failure.

But implementing and automating an entire closed loop is obviously unfeasible or impractical in so many domains.

Rather than chasing an impossible goal, vendors can increase the probability of success by paying attention to the context in which those AI-derived insights will be applied. I.e.

In as much detail as possible, what happens after an insight is delivered?

What is our understanding of how the customer will implement each step of the loop? Has the customer even thought through those steps?

What are the “failure modes” by which the customer will fail to action those insights, either at all or in the way the vendor wants/expects?

As the vendor, what can be done to mitigate those points of failure?

Where do we go from here?

There is a lot more to dig into here. I’m going to follow up on:

How and why customers can perceive the ROI from AI-derived insights so differently from vendors

Why cheap substitutes (rather than high-tech competitors) are so problematic

How AI-insight proof-of-concept deployments can end up failing to prove anything

How vendors can avoid and mitigate these challenges, and what they can do to maximise the perceived and actual benefits of their proposition

––––––––––––––––––––––––––––––––

[^1]: The DIKW Pyramid. https://en.wikipedia.org/wiki/DIKW_pyramid

[^2]: As I said, this is not a criticism of the model. Ackoff does position wisdom as knowledge applied in action. I.e. action is implied, but necessary details are not part of the model.

[^3]: And, as with so many other variables in this loop, how are those people incentivised to do the job properly?

[^4]: I.e. Wisdom -> Software recommends an action -> Action can be executed without vetting -> Action can be executed by machine -> Action success is not dependent on third party cooperation.